So, I have been playing a lot with the open source SIEM product known as OSSIM, from AlienVault. I have been using it since version 2, mostly version 3, and now the newest version, 4. In that time I have gone through a lot of frustrations, but I have come to really enjoy the product. I should state that I just finished my training in San Francisco and will shortly be certified as an AlienVault Certified Security Analyst and Engineer, so this post may be slightly biased: I just learned in depth how the product works and interacts, and I am still really excited about it all. To date, I would say one of OSSIM's greatest strengths is its customization and features. I really enjoy the fact that at its heart it is a lot of open source tools pieced together, interacting in harmony to give you essential visibility into your network. I will say its weakness, if you consider it one, is the difficulty of configuration (something I know they are focused on resolving; 4.0 is a big step in the right direction). However, I feel the difficulty of configuration is somewhat of a strength for implementation. It forces the administrator to become intimately knowledgeable about the SIEM and customize it for their environment, something I feel any security professional would admit is essential for a SIEM. The downside is that it takes a lot of time and man-hours to implement a system like this on a very large network, which is why I originally called it a weakness; but it's all perspective.

Architecture

A bit of background on how OSSIM works. It essentially consists of four main components: sensor, database, framework and server. There is also a logger component in the professional version, optimized for long-term storage in an indexed database; I will not be talking about it here (but it's very cool, and I suggest you check it out). The sensors are placed closest to the devices you want to monitor; they handle the inputs sent from devices, mostly logs, and provide you with tools such as Snort and OpenVAS. The database is the central storage for these events, which enables a lot of the cool features you'll see from the server. The server is essentially the engine behind much of the action: it handles the vulnerability assessments and the correlation of the events in the database. The framework ties everything together in a (mostly) easy-to-use web interface.

So, for example, say you have a LAN segment you need to monitor. You would most likely send your firewall/switch logs to OSSIM via syslog, and mirror or tap traffic to a second network interface to be assessed by Snort. Both are handled by the sensor: you would direct the logs into a separate log file and have a plugin monitor that file continuously. Snort listens on the interface you put in promiscuous mode and analyzes traffic as, I assume, you're already familiar with. The plugin catches events either as pre-defined in the plugins OSSIM provides or however you specify in a custom plugin, as I will show later. These events, along with the events generated by Snort, are then entered into the database. The server is connected to the database and then starts correlating these events, again according to either predefined specifications from AlienVault or your own.

So to put it programmatically:

If Event(A) = Outcome(A)
    Then Search For Event(B) Until Outcome(B) Or Timeout
        If Outcome(A) + Outcome(B) = Risk(High)
            Then GenerateAlert(e-mail|ticket)
        Else ExitLoop
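For readers who prefer real code, here is a minimal Python sketch of the same two-step idea. It is not how the OSSIM server implements correlation: the `Event` fields, the hypothetical "deny followed by a permit from the same source" rule, and the use of a summed priority as a stand-in for Risk(High) are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Event:
    ts: float      # seconds since some reference point
    kind: str      # hypothetical event type, e.g. "deny" or "permit"
    src: str       # source IP
    priority: int  # 0-5, like the priority column used later in the SQL


def correlate(events: Iterable[Event],
              is_a: Callable[[Event], bool],
              is_b: Callable[[Event, Event], bool],
              timeout: float,
              high_risk: int) -> list[tuple[Event, Event]]:
    """If an event matches A, wait up to `timeout` seconds for a matching B.
    Alert when the combined priority reaches `high_risk` (a stand-in for
    Risk(High)); A events that time out are simply dropped."""
    pending: list[Event] = []               # A events still waiting for B
    alerts: list[tuple[Event, Event]] = []
    for ev in sorted(events, key=lambda e: e.ts):
        # "Or Timeout": forget A events whose window has closed.
        pending = [a for a in pending if ev.ts - a.ts <= timeout]
        match = next((a for a in pending if is_b(a, ev)), None)
        if match is not None:
            pending.remove(match)
            if match.priority + ev.priority >= high_risk:
                alerts.append((match, ev))  # GenerateAlert(e-mail|ticket)
            continue                        # this chain is finished either way
        if is_a(ev):
            pending.append(ev)
    return alerts


# Hypothetical rule: a denied connection followed within 60 s by a
# permitted one from the same source.
events = [
    Event(0, "deny", "10.0.0.5", 4),
    Event(5, "deny", "10.0.0.9", 4),
    Event(20, "permit", "10.0.0.5", 2),
    Event(200, "permit", "10.0.0.9", 2),  # arrives after its window closed
]
alerts = correlate(
    events,
    is_a=lambda e: e.kind == "deny",
    is_b=lambda a, e: e.kind == "permit" and e.src == a.src,
    timeout=60,
    high_risk=6,
)
print([(a.src, b.ts) for a, b in alerts])  # [('10.0.0.5', 20)]
```

Only the first deny/permit pair raises an alert; the second permit arrives after its 60-second window and is ignored.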

Correlation can continue over a chain of several events, I think up to 10. However, that level of complexity is kind of overkill and would most likely be aimed at events on par with something like an APT. That is the main point of the server. The framework ties all these pieces together and provides you with a web interface to use and search all this information.

Now, the great thing about this architecture is distribution. You can place sensors all over your environment and have them report to the other pieces as needed. By default, OSSIM comes in an all-in-one fashion, which is a lot easier to set up and work with, but it will only work for really small environments. The ability to distribute really allows you to implement it on a larger scale.

One limitation of OSSIM is that it cannot handle a lot of events per second; that is one of the perks of the professional version. If you distribute it over a lot of machines you may be able to work around that limitation (I do not recommend it, though).

Customizing Plugins

The thing I love most about OSSIM is that, once you understand how it works, it's pretty easy to customize it to your needs. I will show the simplicity with my own experience and an example.

I have been working with Cisco ASAs for a while now and have been centralizing logs for some time. I wanted an easier way to interpret this data than poring over thousands of logs a day, as I am sure many of you are familiar with. This is when I found OSSIM, which already has its own ASA plugin available; but it didn't provide a format I liked personally. The message it provided for the type of event was difficult to differentiate on the fly, and it did not account for non-IP values in the logs (on the ASA you can give IPs a hostname to easily sort through the IPs you'll see often in connection logs). However, as I would soon find out, the destination and source IP fields can only hold an IPv4 address at this time; something I greatly hope they change, and kind of expect to see with the IPv6 support coming, from what I've read on their forums. So, if you want to see an easier-to-read name for an IP, you have to disable the naming feature in the ASA and then define that IP in the assets portion of your SIEM. So, let's dive into building a custom plugin for an ASA; the methodology will translate to any log format you can populate.

First, you'll need to configure your source to send logs to the OSSIM server, which already has syslog listening for events by default. However, when we do that, the messages are simply appended to /var/log/syslog, which can get very noisy, so ideally we separate these logs from syslog and place them in their own file. We can do this by creating a configuration file in /etc/rsyslog.d:

- vi /etc/rsyslog.d/asa.conf
- Insert line 1: if $fromhost-ip == '192.168.9.101' then -/var/log/cisco-asa.log
- Insert line 2: if $fromhost-ip == '192.168.9.101' then ~

What this does is create a configuration file for your ASA; rsyslog in OSSIM is configured to use any *.conf file you create in that folder. The first line says that if the log comes from 192.168.9.101 (our ASA's IP), write it to cisco-asa.log (the leading - tells rsyslog not to sync the file after every write). Line two matches the same IP and then discards the message (~), so it lands only in cisco-asa.log and not in syslog as well. Now you're almost set and have logs coming in, but first you'll want to configure log rotation in /etc/logrotate.d/. Since this plugin's log is standard syslog, I simply added the log file to the rsyslog rotation that is already enabled. That rotates the log on a daily basis, so it doesn't eat up your storage.
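To make the effect of the rule order concrete, here is a small Python model of the two asa.conf lines. It is a model, not rsyslog code, and it assumes, as the text implies, that the rsyslog.d files are processed before the default rule that writes to /var/log/syslog. The `route()` helper and the second IP address are made up for the example.

```python
ASA_IP = "192.168.9.101"  # the ASA address from the rsyslog rules above


def route(fromhost_ip: str) -> list[str]:
    """Model of the two asa.conf lines, followed by an assumed default rule.
    Returns the log files a message from `fromhost_ip` would end up in."""
    written_to: list[str] = []
    # Line 1: if $fromhost-ip == '192.168.9.101' then -/var/log/cisco-asa.log
    if fromhost_ip == ASA_IP:
        written_to.append("/var/log/cisco-asa.log")
    # Line 2: if $fromhost-ip == '192.168.9.101' then ~
    # "~" discards the message, so no later rule sees it.
    if fromhost_ip == ASA_IP:
        return written_to
    # Assumed default rule that would otherwise catch the message.
    written_to.append("/var/log/syslog")
    return written_to


asa_files = route("192.168.9.101")
other_files = route("192.168.9.50")   # hypothetical non-ASA host
print(asa_files)    # ['/var/log/cisco-asa.log']
print(other_files)  # ['/var/log/syslog']
```

Swapping the two lines would discard the ASA messages before they were ever written to cisco-asa.log, which is why the discard comes second.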

Now that you have a defined log source, you can build your plugin around that information. Plugins are all stored in a central location, /etc/ossim/agent/plugins/. Here I will create a file called custom-asa.cfg.

Take a look at the beginning portion of this configuration file:

;; custom-asa
;; plugin_id: 9010
;;
;; $Id: *-asa.cfg,v 0.2 2012/7/29 Mike Ahrendt
;;
;; The order rules are defined matters.
;;

[DEFAULT]
plugin_id=9010

[config]
type=detector
enable=yes
source=log
location=/var/log/cisco-asa.log
create_file=yes
process=
start=no
stop=no
startup=
shutdown=
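If you want a quick sanity check of the header before restarting anything, Python's standard `configparser` can read it, and it treats lines starting with `;` as comments, just as the `;;` lines are used here. This is a hedged sketch: whether the OSSIM agent parses the file with exactly the same rules is an assumption, and `check_plugin_header()` is a hypothetical helper that only tests the fields discussed in the text.

```python
import configparser

# Header of custom-asa.cfg as shown above. configparser skips lines that
# start with ";", so the ";;" comment lines are ignored.
PLUGIN_HEADER = """\
;; custom-asa
;; plugin_id: 9010
;;
[DEFAULT]
plugin_id=9010

[config]
type=detector
enable=yes
source=log
location=/var/log/cisco-asa.log
create_file=yes
process=
start=no
stop=no
startup=
shutdown=
"""


def check_plugin_header(text: str) -> dict:
    """Hypothetical sanity check for the fields discussed in the text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    cfg = parser["config"]               # plugin_id is inherited from [DEFAULT]
    plugin_id = cfg.getint("plugin_id")
    problems = []
    if plugin_id < 9000:
        problems.append("custom plugins should use an ID of 9000 or above")
    if cfg.get("type") != "detector":
        problems.append("expected type=detector")
    if not cfg.getboolean("enable"):
        problems.append("plugin is not enabled")
    if cfg.get("source") != "log":
        problems.append("expected source=log")
    return {"plugin_id": plugin_id,
            "location": cfg.get("location"),
            "problems": problems}


result = check_plugin_header(PLUGIN_HEADER)
print(result)
```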

This is a pretty straightforward implementation: you have a plugin ID of 9010 (IDs 9000 and above are reserved for custom plugins). Then you simply declare that this is a detector plugin and that it is enabled. Next we define that our source is a log file at the location we created earlier, and that the file can be created if necessary. The next portion is not really relevant to our purpose, but it is used by plugins for tools that are incorporated in OSSIM. For instance, the OSSEC plugin has processes tied to it, so when the plugin runs it can start the OSSEC process automatically. Again, this is not needed for us. Also note that ;; marks a comment in your plugins; comments are not necessary, just kind of helpful.

Now for the really fun part of the plugin creation process: REGULAR EXPRESSIONS!!!! Admittedly, I knew nothing about regular expressions, and building these plugins showed me what I had been missing. If you're not already familiar with regular expressions, you should keep a cheat sheet handy (I recommend this one: http://www.cheatography.com/davechild/cheat-sheets/regular-expressions/). The next part of the plugin relies on building regular expressions around the common format of the log file. Looking at this log, we would see something like this:

Jul 2 10:23:00 192.168.9.101 %ASA-0-106100: access-list 129-network_access_in denied tcp 129-network/198.110.72.148(61388) -> Secure-135/DB-136(1521) hit-cnt 1 first hit [0xbbdcff06, 0x0]

Now we need to build a regular expression that matches this input for every log of this type. Starting with the date is pretty easy. We have Jul 2 10:23:00, so our regular expression is \D{3,4} (3 or 4 non-digit characters), \s (a space), \d{1,2} (the one- or two-digit day), \s, then \d\d:\d\d:\d\d (two digits, colon, two digits, colon, two digits). Put together, with the named variable the AlienVault plugin uses, we have:

(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)
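Since the plugin patterns use Python-style named groups (`(?P<name>...)`), Python's `re` module is a convenient place to try them out. This sketch checks the date pattern, including the `\d{1,2}\s` for the day of the month that the final rule uses, against a single-digit day, a space-padded day (classic syslog pads days below 10 with a space, as in `Jul  2`) and a two-digit day. The timestamps other than `Jul 2 10:23:00` are made up for the test.

```python
import re

# Date part of the rule, with \d{1,2}\s for the day of the month.
DATE_RE = re.compile(r"(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)")

samples = [
    "Jul 2 10:23:00",    # single-digit day, one space
    "Jul  2 10:23:00",   # syslog-style space-padded day
    "Jul 12 10:23:00",   # two-digit day
]
dates = []
for stamp in samples:
    m = DATE_RE.match(stamp)
    dates.append(m.group("date") if m else None)
    print(repr(stamp), "->", "match" if m else "no match")
```

The padded case works because \D{3,4} can absorb the first space, leaving \s for the second one.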

Now for the sensor IP of 192.168.9.101, we build \d{1,3} (a number 1 to 3 digits long) four times, separated by . (an unescaped dot matches any character, so it also matches the literal dot; \. would be stricter). Adding to the plugin now:

(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})

Adding the ASA syslog ID, we put \s (a space), the exact text %ASA-, \d (one digit), a -, \d+ (one or more digits), . (any character, here the colon) and \s. Now our expression becomes:

(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})\s%ASA-\d-\d+.\s
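The same approach works for the header built so far. This sketch matches it against the sample log line, shows where the match ends (right before access-list), and demonstrates that the unescaped `.` in the sensor group accepts any separator, so `192-168-9-101` still matches; writing `\.` instead would reject it. The altered IP string is purely illustrative.

```python
import re

HEADER_RE = re.compile(
    r"(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s"
    r"(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})\s%ASA-\d-\d+.\s"
)

line = ("Jul 2 10:23:00 192.168.9.101 %ASA-0-106100: access-list "
        "129-network_access_in denied tcp 129-network/198.110.72.148(61388) "
        "-> Secure-135/DB-136(1521) hit-cnt 1 first hit [0xbbdcff06, 0x0]")

m = HEADER_RE.match(line)
print(m.group("date"), "|", m.group("sensor"))
print("rest of line:", line[m.end():][:40])

# The unescaped "." accepts any character, so this still matches;
# using \. in place of . would reject it.
loose = HEADER_RE.match(line.replace("192.168.9.101", "192-168-9-101"))
print("loose match:", loose is not None)
```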

Now for the non-standard parts, which become variables for OSSIM. I match access-list exactly, then use \S+ (one or more non-whitespace characters, up to the next space) for a variable holding the access-list name. In these logs you will next see permitted or denied, so I assign that word to its own variable; we'll see why that's important in a bit. Next comes the protocol in a variable, then the network on which the transmission occurred, which I map to the interface in OSSIM. Finally, I capture the source IP and port, then the destination IP and port. In this final version I also loosened %ASA-\d- to %\D\D\D-\d+-. All together:

"(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})\s%\D\D\D-\d+-\d+.\saccess-list\s(?P<msg>\S+)\s(?P<sid>\w+)\s(?P<proto>\w+)\s(?P<iface>\S+)\/(?P<src>\S+)\((?P<sport>\d+)\)\s..\s\S+\/(?P<dst>\S+)\((?P<dport>\d+)\)"

In the plugin, we assign this to a rule like so:

[AAAA - Access List]
regexp="(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})\s%\D\D\D-\d+-\d+.\saccess-list\s(?P<msg>\S+)\s(?P<sid>\w+)\s(?P<proto>\w+)\s(?P<iface>\S+)\/(?P<src>\S+)\((?P<sport>\d+)\)\s..\s\S+\/(?P<dst>\S+)\((?P<dport>\d+)\)"
event_type=event
date={normalize_date($date)}
sensor={resolv($sensor)}
plugin_sid={translate($sid)}
src_ip={$src}
src_port={$sport}
dst_ip={$dst}
dst_port={$dport}
interface={$iface}
userdata1={$msg}
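Putting the whole rule together, the following Python sketch runs the rule's regexp against the sample log and maps the named groups onto the OSSIM fields on the right-hand side of the rule. It only approximates what the agent does: `normalize_date`, `resolv` and `translate` are not applied, and `parse()` and `FIELD_MAP` are hypothetical names. Note that `dst_ip` comes out as the ASA object name `DB-136` rather than an IPv4 address, which is exactly the naming issue described earlier.

```python
import re
from typing import Optional

# regexp from the [AAAA - Access List] rule, split across lines for readability.
ACCESS_LIST_RE = re.compile(
    r"(?P<date>\D{3,4}\s\d{1,2}\s\d\d:\d\d:\d\d)\s"
    r"(?P<sensor>\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3})\s%\D\D\D-\d+-\d+.\saccess-list\s"
    r"(?P<msg>\S+)\s(?P<sid>\w+)\s(?P<proto>\w+)\s(?P<iface>\S+)\/(?P<src>\S+)"
    r"\((?P<sport>\d+)\)\s..\s\S+\/(?P<dst>\S+)\((?P<dport>\d+)\)"
)

# OSSIM field -> regex group, mirroring the rule's right-hand sides
# (normalize_date, resolv and translate are not applied here).
FIELD_MAP = {
    "date": "date", "sensor": "sensor", "plugin_sid": "sid",
    "src_ip": "src", "src_port": "sport", "dst_ip": "dst",
    "dst_port": "dport", "interface": "iface", "userdata1": "msg",
}


def parse(line: str) -> Optional[dict]:
    """Return the raw OSSIM fields for a matching line, else None."""
    m = ACCESS_LIST_RE.match(line)
    if m is None:
        return None
    return {field: m.group(group) for field, group in FIELD_MAP.items()}


sample = ("Jul 2 10:23:00 192.168.9.101 %ASA-0-106100: access-list "
          "129-network_access_in denied tcp 129-network/198.110.72.148(61388) "
          "-> Secure-135/DB-136(1521) hit-cnt 1 first hit [0xbbdcff06, 0x0]")
event = parse(sample)
for field, value in event.items():
    print(f"{field:<11}{value}")
```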

Now you may notice some interesting things in this plugin. First, note that I start the rule name with AAAA. Although the plugin SIDs themselves have to be numbers, the engine parses these rules alphabetically, and naming them with numbers makes it difficult to get your rules processed in the order you want. Therefore, I suggest using letters for your rule names.
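The ordering problem is easy to see with plain string sorting, which is how the text describes the engine treating rule names. In this sketch, only `AAAA - Access List` comes from the plugin above; the other rule names are hypothetical.

```python
# Rule (section) names sorted as plain strings.
numeric = ["1 - Access List", "2 - Teardown", "10 - Built"]
lettered = ["AAAA - Access List", "AAAB - Teardown", "AAAC - Built"]

print(sorted(numeric))   # ['1 - Access List', '10 - Built', '2 - Teardown']
print(sorted(lettered))  # ['AAAA - Access List', 'AAAB - Teardown', 'AAAC - Built']
```

Rule 10 lands between rules 1 and 2, while the lettered names keep the intended order.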

We also see that some variables are wrapped in functions. These are built into OSSIM and are part of /usr/share/ossim-agent/ossim_agent/ParserUtil.py. There are several functions, but the major ones are used in this plugin. normalize_date is self-explanatory, I believe. resolv simply parses the IP and normalizes it. translate is great if you have similar log messages. If you'll recall, this log varies only at permitted or denied. With translate, we take the $sid variable, read its value, and translate it to something else via the translation portion of the plugin, which looks like this:

[translation]
permitted=600
denied=601
inbound=603
outbound=604
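The translation step is essentially a dictionary lookup. This sketch loads the `[translation]` section with `configparser` and looks up the captured `$sid` word. The `translate()` function here is a stand-in for OSSIM's, not its implementation, and what the real agent does with a word missing from the table is not covered; this version simply raises `KeyError`.

```python
import configparser

TRANSLATION = """\
[translation]
permitted=600
denied=601
inbound=603
outbound=604
"""

parser = configparser.ConfigParser()
parser.read_string(TRANSLATION)
# configparser lower-cases keys, so lookups are case-insensitive here.
table = {key: int(value) for key, value in parser["translation"].items()}


def translate(word: str) -> int:
    """Map the captured $sid word (e.g. "denied") to its plugin_sid."""
    return table[word.lower()]


print(translate("denied"))     # 601
print(translate("permitted"))  # 600
```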

So the plugin translates the word to either 600 or 601, which we then use as the rule identifier. This is just one rule of the plugin I wrote; I have built a plugin that matches 19 of the most common logs I have found so far.

Looking through the ASA documentation, the %ASA-\d-\d\d\d\d\d\d code is unique to each log type. Specifically, the first three digits of the six-digit number are semi-generic to various types of events. For example, if we see %ASA-6-212\d\d\d, we know the event has something to do with SNMP. So it would be possible to build events around those prefixes, which is what I did for some rules, but those were events that are non-standard for my environment. So I am seeing those events; I just don't get the same granularity in the OSSIM interface as I do for the events I really want to see.
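The tag structure described above can be pulled apart with a regular expression: the digit after `%ASA-` is the syslog severity level (0 to 7) and the six digits that follow are the message ID. This sketch groups a message by the first three digits of its ID. `PREFIX_FAMILIES` is a hypothetical lookup table whose only entry taken from the text is 212 for SNMP, and the ID 212001 is used only as an example of that prefix.

```python
import re
from typing import Optional

# %ASA-<severity level 0-7>-<six-digit message ID>
ASA_TAG_RE = re.compile(r"%ASA-(?P<level>[0-7])-(?P<msg_id>\d{6})")

# Hypothetical prefix table; only 212 -> SNMP comes from the text.
PREFIX_FAMILIES = {"212": "SNMP"}


def classify(line: str) -> Optional[tuple[int, str, str]]:
    """Return (severity, message ID, family) for the first ASA tag in `line`."""
    m = ASA_TAG_RE.search(line)
    if m is None:
        return None
    msg_id = m.group("msg_id")
    family = PREFIX_FAMILIES.get(msg_id[:3], "unclassified")
    return int(m.group("level")), msg_id, family


print(classify("%ASA-6-212001: example message"))     # (6, '212001', 'SNMP')
print(classify("%ASA-0-106100: access-list example"))  # (0, '106100', 'unclassified')
```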

Now that we have a plugin configuration with all our rules, we almost have a working plugin. Next, we need to build a SQL file so that the database knows about the plugin and its rules and will generate the event upon a match. Fairly simple; let's take a look at the SQL:

-- GRCC ASA Plugin
-- plugin_id: 9010

DELETE FROM plugin WHERE id = "9010";
DELETE FROM plugin_sid WHERE plugin_id = "9010";

INSERT IGNORE INTO plugin (id, type, name, description) VALUES (9010, 1, 'custom-asa', 'Custom ASA plugin');

INSERT IGNORE INTO plugin_sid (plugin_id, sid, category_id, class_id, reliability, priority, name) VALUES (9010, 600, NULL, NULL, 2, 2, 'Access List Permitted');
INSERT IGNORE INTO plugin_sid (plugin_id, sid, category_id, class_id, reliability, priority, name) VALUES (9010, 601, NULL, NULL, 2, 4, 'Access List Denied');
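With 19 rules, writing every INSERT by hand gets tedious. As a hedged sketch, a few lines of Python can generate the same statements from a list of (sid, reliability, priority, name) tuples. `build_sql()` and `sql_quote()` are hypothetical helpers, and the quoting only doubles single quotes, which is enough for simple rule names but is not a general-purpose SQL escaper.

```python
PLUGIN_ID = 9010

# (sid, reliability, priority, name), taken from the SQL above.
RULES = [
    (600, 2, 2, "Access List Permitted"),
    (601, 2, 4, "Access List Denied"),
]


def sql_quote(text: str) -> str:
    """Wrap in single quotes, doubling any embedded single quote."""
    return "'" + text.replace("'", "''") + "'"


def build_sql(plugin_id: int, name: str, description: str, rules) -> str:
    lines = [
        f'DELETE FROM plugin WHERE id = "{plugin_id}";',
        f'DELETE FROM plugin_sid WHERE plugin_id = "{plugin_id}";',
        "INSERT IGNORE INTO plugin (id, type, name, description) VALUES "
        f"({plugin_id}, 1, {sql_quote(name)}, {sql_quote(description)});",
    ]
    for sid, reliability, priority, rule_name in rules:
        lines.append(
            "INSERT IGNORE INTO plugin_sid (plugin_id, sid, category_id, "
            "class_id, reliability, priority, name) VALUES "
            f"({plugin_id}, {sid}, NULL, NULL, {reliability}, {priority}, "
            f"{sql_quote(rule_name)});"
        )
    return "\n".join(lines) + "\n"


sql = build_sql(PLUGIN_ID, "custom-asa", "Custom ASA plugin", RULES)
print(sql)
```

The output can then be saved as custom-asa.sql and loaded with ossim-db as described in the next steps.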

The first two lines are simple comments and are not required for the SQL file. The next two lines delete any existing entries for this plugin from the database, so re-running the file does not leave duplicates. Next we insert the plugin ID into the database. Then come all of our rules: we give each one the database fields plugin_id (which we assigned 9010), then the rule ID you want (from the translation table; you'll remember I assigned these rules 600 and 601), then a category_id and class_id (not necessary, so I've set them to NULL). Reliability and priority are pretty important; this is how OSSIM assesses the risk posed by these events. A permit is not necessarily something to freak out about, so I assign it a priority of 2. A deny, however, is more important to me, so I assigned it a 4, as I want those to stand out more. The name is self-explanatory, just a description of what the rule is. We then save this as a custom-asa.sql file in /usr/share/doc/ossim-mysql/contrib/plugins/. Now all that is left is loading the SQL into the database with a command; there are a few options, run from the SQL plugin directory mentioned above:

- cat custom-asa.sql | ossim-db
- ossim-db < custom-asa.sql

Either command should work; I personally favor the latter. I did notice in version 3 that it would sometimes take a few tries before the plugin showed up in the setup interface. In version 4, however, it worked the first time with no problem.
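As for how the reliability and priority values in the SQL turn into risk: OSSIM's risk value is commonly documented as (asset value * priority * reliability) / 25, with asset value and priority ranging from 0 to 5 and reliability from 0 to 10. The sketch below applies that formula to the two rules; treat the formula as background rather than something verified in this post, and the asset value of 2 as an assumption.

```python
def ossim_risk(asset_value: int, priority: int, reliability: int) -> float:
    """Risk as commonly documented for OSSIM:
    (asset value * priority * reliability) / 25.
    Assumed ranges: asset 0-5, priority 0-5, reliability 0-10."""
    for name, value, upper in (("asset_value", asset_value, 5),
                               ("priority", priority, 5),
                               ("reliability", reliability, 10)):
        if not 0 <= value <= upper:
            raise ValueError(f"{name} must be between 0 and {upper}")
    return asset_value * priority * reliability / 25


# Assumed asset value of 2 for the hosts involved.
permit_risk = ossim_risk(2, 2, 2)   # sid 600: priority 2, reliability 2
deny_risk = ossim_risk(2, 4, 2)     # sid 601: priority 4, reliability 2
print(permit_risk, deny_risk)
```

Doubling the priority doubles the risk, which is why the denies stand out more than the permits.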

We can verify that the plugin was injected into the database properly by logging into the web interface and going to Configuration > Collection > Data Sources, where we can search for ID 9010. It will look like this:

There we have it! Our plugin is registered within the database and recognizes the right rules. We will also see it in the console setup: log into OSSIM's terminal, enter "ossim-setup" at the command line, then select "Change Sensor Settings" followed by "enable/disable detector plugins", and we should see an option for "custom-asa" as well:

Now simply check the plugin, hit OK, and reconfigure the server through "ossim-setup". And voila! We have what should be a working plugin. But how can we verify this, you ask?

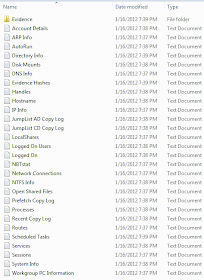

We can troubleshoot our OSSIM plugin with relative ease. You will need a few things: a few consoles open (I suggest using PuTTY), a sample of the logs you are trying to parse, and some time. First, open three terminals. I like to arrange them something like this:

This gives you two streams of information to follow when you inject the sample log into the monitored log. The agent.log will display messages about the plugin's parsing, and server.log will report events added to the database. Now we need to start injecting the sample into cisco-asa.log. Because the plugin monitors in real time, you can't simply drop a log in and have it parsed from the beginning; you have to inject it into the live file. This is easily done. I recommend taking a sample from your already collected logs, making a copy, and naming it something simple (in the screen above you may see I named my copy INJECTABLE). Once we have a copy, we simply run this command:

cat /var/log/INJECTABLE >> /var/log/cisco-asa.log

When this is complete, you will see the agent log start parsing all of the events, and then the server log will show all the events that were added to the database.
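The reason you have to append rather than start from scratch is that the plugin follows the file the way `tail -f` does: it only sees lines written after it started watching. The minimal Python sketch below shows that behavior with a temporary file. `LogFollower` is a hypothetical class illustrating the idea, not the OSSIM agent's code, and it ignores log rotation.

```python
import os
import tempfile


class LogFollower:
    """Minimal tail -f style reader: starts at the current end of the file
    and only returns lines appended afterwards."""

    def __init__(self, path: str):
        self._fh = open(path, "r")
        self._fh.seek(0, os.SEEK_END)   # skip everything already in the file

    def read_new_lines(self) -> list[str]:
        return [line.rstrip("\n") for line in self._fh.readlines()]

    def close(self) -> None:
        self._fh.close()


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "cisco-asa.log")
    with open(log_path, "w") as f:
        f.write("old line written before the follower started\n")

    follower = LogFollower(log_path)
    # Equivalent of: cat INJECTABLE >> cisco-asa.log
    with open(log_path, "a") as f:
        f.write("injected line 1\ninjected line 2\n")
    new_lines = follower.read_new_lines()
    follower.close()

print(new_lines)  # ['injected line 1', 'injected line 2']
```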

So now we've turned those horrible logs we saw earlier into this (sanitized) view:

As you'll see, we have a list with the signature we've created, the time, the sensor (the IP of the actual ASA), your source IP and port (with the country, if it is an external IP), the destination IP and port as well, your asset values and your associated risk. The 0.0.0.0 appears because I use non-standard IPs (host names) in my ASA configuration: the host name is converted to 0.0.0.0. However, if you click into the event, it shows the whole log with the proper information. This is the point I wish OSSIM would change, but that's only my own personal preference. As I said, you can turn naming off in the ASA and then assign the IPs you gave host names to an asset tag, which gives you an even easier-to-read event in the SIEM.

As you can see, OSSIM is pretty powerful. I highly recommend you give it a look, as I think it will improve a lot in a short period. You can go to the AlienVault community site, where OSSIM is hosted:

http://communities.alienvault.com/community/. You can find their documentation on building a plugin here:

http://www.alienvault.com/docs/AlienVault%20Building%20Collector%20Plugins.pdf.

Also, if you check out OSSIM and want to try my plugins, you can download my currently implemented SQL and configuration files. I think they're pretty good, though I still need to update them a little to cover all the ASA log types, and I also want to build more defined rules (but this is a start to get you going and customizing for your own needs). Also, once I have more defined rules, I will be able to build correlation rules for them; but more on that later.